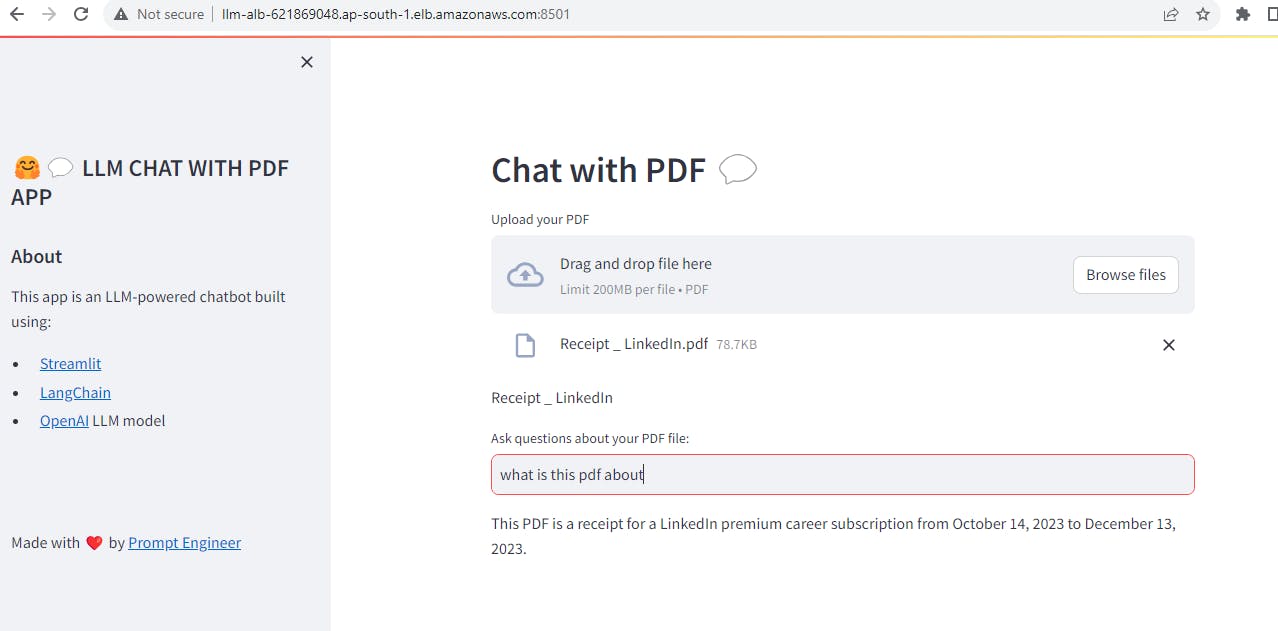

In this blog, we will deploy a ChatGPT-powered PDF assistant, built with LangChain and Streamlit, on Amazon ECS with AWS Fargate.

This is the application developed by the YouTube channel Prompt Engineering.

You can get all the source code and the Dockerfile from the YouTube channel above or from my GitHub link.

Amazon Elastic Container Service:

Amazon Elastic Container Service (Amazon ECS) is a fully managed container orchestration service that helps you easily deploy, manage, and scale containerized applications. There are three layers in Amazon ECS:

Capacity - The infrastructure where your containers run. It can be Amazon EC2 instances, AWS Fargate, or on-premises virtual machines (VMs) or servers.

Controller - Deploys and manages the applications that run in your containers.

Provisioning - The tools you use to interface with the scheduler to deploy and manage your applications and containers.

To deploy applications on Amazon ECS, the application components must be configured to run in containers. So we will write a Dockerfile for our application, build an image from it, and push that image to Amazon ECR or DockerHub. Here I will be pushing it to my DockerHub account.

Dockerfile:

Here's what the Dockerfile looks like. You can get requirements.txt and the model.py file from my GitHub account.

```dockerfile
FROM python:3.10

# copy the requirements file into the image
COPY requirements.txt /app/requirements.txt

# switch working directory
WORKDIR /app

RUN pip install --upgrade pip
RUN pip install wheel
RUN pip install cmake
RUN pip uninstall cmake -y
RUN pip install pyportfolioopt

# install the dependencies and packages in the requirements file
RUN pip install -r requirements.txt

# placeholder only; the real key is supplied at runtime through the ECS task definition
ENV OPENAI_API_KEY=open-api-key

# copy the application code into the image
COPY model.py /app

# write the key to a .env file (this captures the build-time value above)
RUN echo "OPENAI_API_KEY=$OPENAI_API_KEY" > .env

# start the Streamlit app on port 8501
CMD [ "streamlit", "run", "model.py", "--server.port", "8501" ]
```

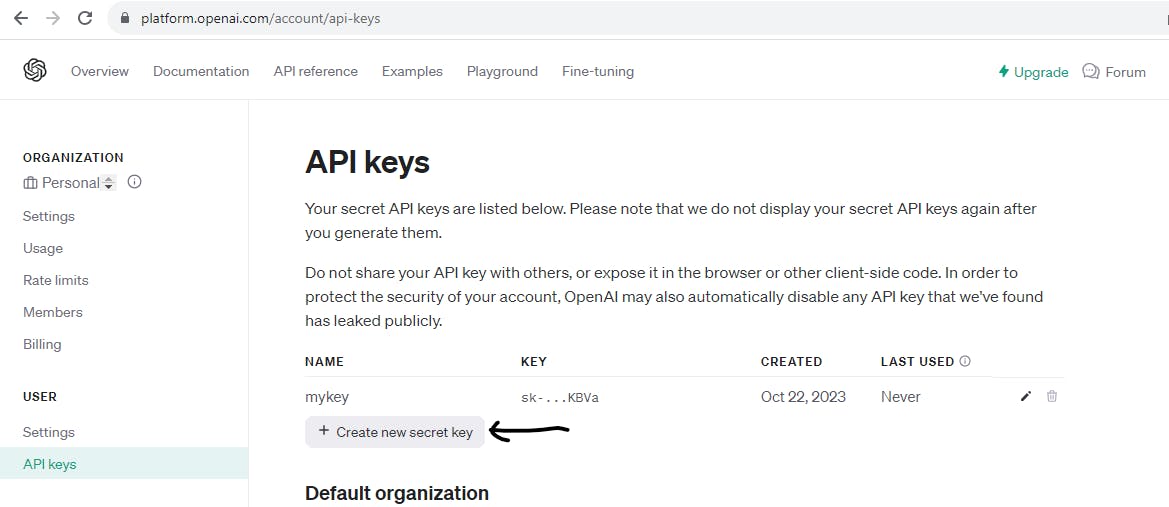

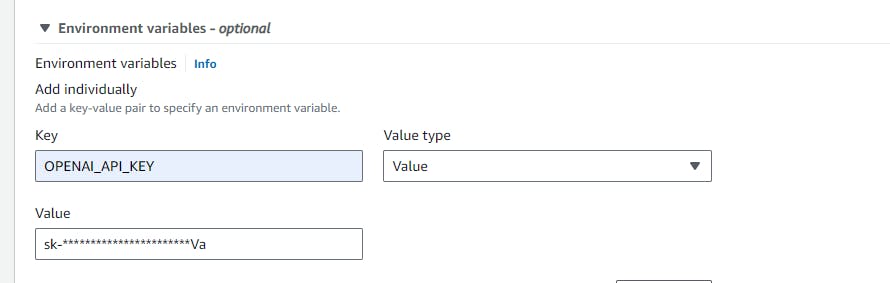

We need an OpenAI API key, which you can create on the OpenAI platform as shown below. Copy the key and save it somewhere safe. We will pass it as an environment variable when we create the task definition for the ECS service.
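Since the key reaches the container as an environment variable, the application has to read it from there at startup. The snippet below is a minimal sketch of that lookup, not the code from model.py; the helper name `get_openai_api_key` is hypothetical, and treating the Dockerfile placeholder `open-api-key` as "missing" is my own assumption.

```python
import os


def get_openai_api_key(env=None):
    """Return the OpenAI API key from the environment or raise a clear error."""
    # `env` defaults to the real process environment; passing a plain dict
    # makes the function easy to try out without touching real settings.
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    # "open-api-key" is the placeholder value set in the Dockerfile, so it is
    # treated the same as a missing key (an assumption of this sketch).
    if not key or key == "open-api-key":
        raise RuntimeError("OPENAI_API_KEY is not set to a real key")
    return key
```

Failing early like this gives a clearer error in the container logs than a rejected request to the OpenAI API later on.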

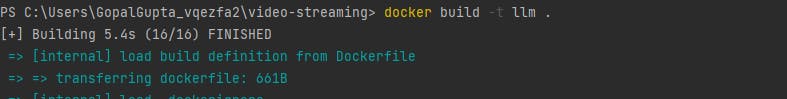

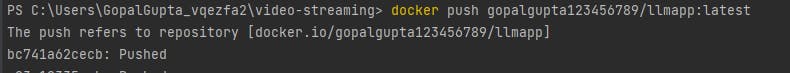

Once that is done, let's build the Docker image from the Dockerfile and push it to DockerHub.

Run the commands below to build the image, tag it, and push it to DockerHub.

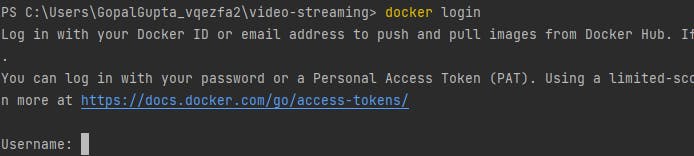

I already have a DockerHub account and have created a repository there to receive the image from my local system. You can use the docker login command to connect to your account.

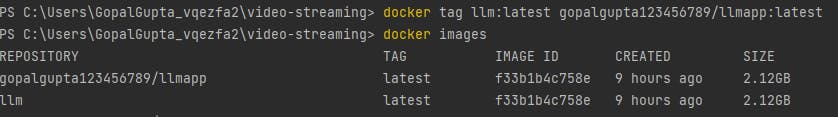

After you log in successfully, let's tag our local image with the name of the repository created on DockerHub and push it to the hub.
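For readers who cannot see the screenshots, the build, tag, and push sequence can be sketched as follows. This is an illustration under assumptions: the image name `pdf-assistant` and the repository `your-dockerhub-user/pdf-assistant` are placeholders, not the names used in this blog. The script prints the commands by default and only runs them when `dry_run` is set to `False`.

```python
import subprocess


def docker_commands(local_image, hub_repo, tag="latest"):
    """Return the build, tag, and push commands as argument lists."""
    remote = f"{hub_repo}:{tag}"
    return [
        # build the image from the Dockerfile in the current directory
        ["docker", "build", "-t", local_image, "."],
        # give the local image a name that points at the DockerHub repository
        ["docker", "tag", local_image, remote],
        # upload the tagged image (requires a prior `docker login`)
        ["docker", "push", remote],
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # placeholder names; replace them with your own image and repository
    run_all(docker_commands("pdf-assistant", "your-dockerhub-user/pdf-assistant"))
```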

Task Definition:

After we build and store our image, we need to create an Amazon ECS task definition. A task definition is a blueprint for our application: a text file in JSON format that describes the parameters and the one or more containers that form the application. For example, it specifies which image each container uses, the operating system parameters, which ports to open, and which data volumes the containers in the task use. The parameters we actually set depend on the needs of our application.
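To make the "blueprint" idea concrete, here is a rough sketch of what such a JSON document might contain for this application, built as a Python dictionary. The family name, container name, and the CPU and memory sizes are assumptions for illustration; the field names follow the ECS task definition format.

```python
import json


def build_task_definition(image, api_key, execution_role_arn):
    """Build a Fargate task definition for a single Streamlit container."""
    return {
        "family": "pdf-assistant",                # hypothetical name
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",                  # Fargate tasks use awsvpc networking
        "cpu": "1024",                            # 1 vCPU (assumed size)
        "memory": "3072",                         # 3 GB (assumed size)
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [
            {
                "name": "pdf-assistant",          # hypothetical name
                "image": image,
                "essential": True,
                # Streamlit listens on 8501, as set in the Dockerfile CMD
                "portMappings": [{"containerPort": 8501, "protocol": "tcp"}],
                "environment": [{"name": "OPENAI_API_KEY", "value": api_key}],
            }
        ],
    }


if __name__ == "__main__":
    task_def = build_task_definition(
        "your-dockerhub-user/pdf-assistant:latest",              # placeholder image
        "<your-openai-api-key>",                                  # placeholder key
        "arn:aws:iam::123456789012:role/ecsTaskExecutionRole",    # placeholder ARN
    )
    print(json.dumps(task_def, indent=2))
```

The printed JSON has the same shape as what the console generates. It could also be registered with `aws ecs register-task-definition --cli-input-json` or with boto3's `register_task_definition`, although this blog uses the console.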

Steps:

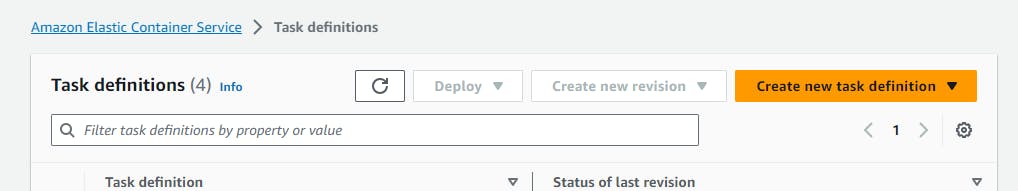

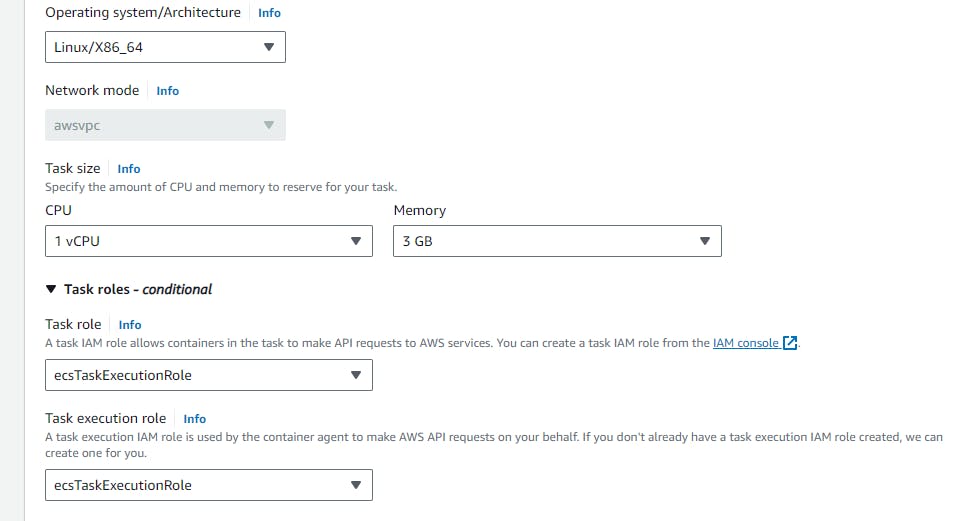

From your AWS console, go to the ECS service and click on Task definitions --> Create new task definition.

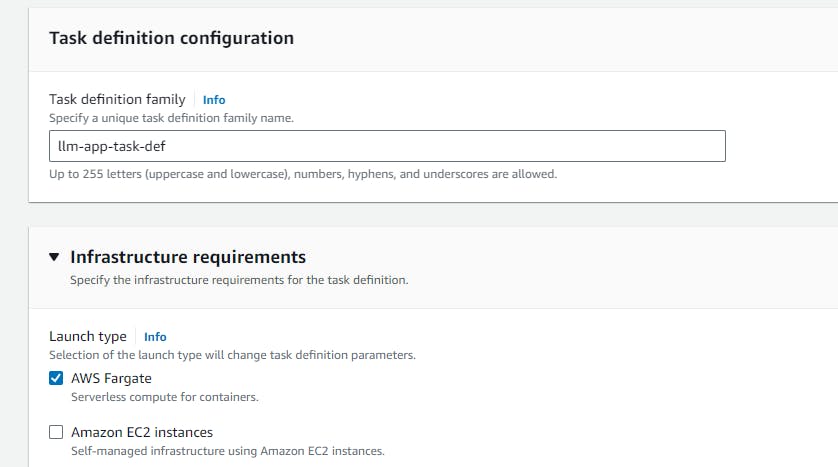

Give the task definition a name --> choose AWS Fargate as the launch type.

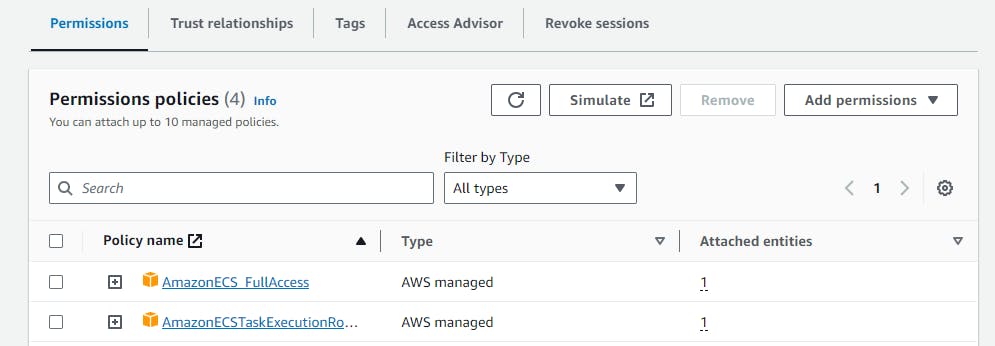

For this, let's go with the details below, which are sufficient to run our application. For the task execution role, you can create ecsTaskExecutionRole and attach the policy shown below.
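For reference, a role like ecsTaskExecutionRole needs a trust policy that lets ECS tasks assume it. The sketch below shows that trust policy as a Python dictionary. It assumes the attached policy is the AWS managed policy AmazonECSTaskExecutionRolePolicy, which is the usual choice; check it against the policy in the screenshot.

```python
import json

# AWS managed policy commonly attached to the task execution role
# (assumed here; verify against the policy you actually attach).
TASK_EXECUTION_POLICY_ARN = (
    "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"
)


def ecs_tasks_trust_policy():
    """Return a trust policy that allows ECS tasks to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ecs-tasks.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(ecs_tasks_trust_policy(), indent=2))
```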

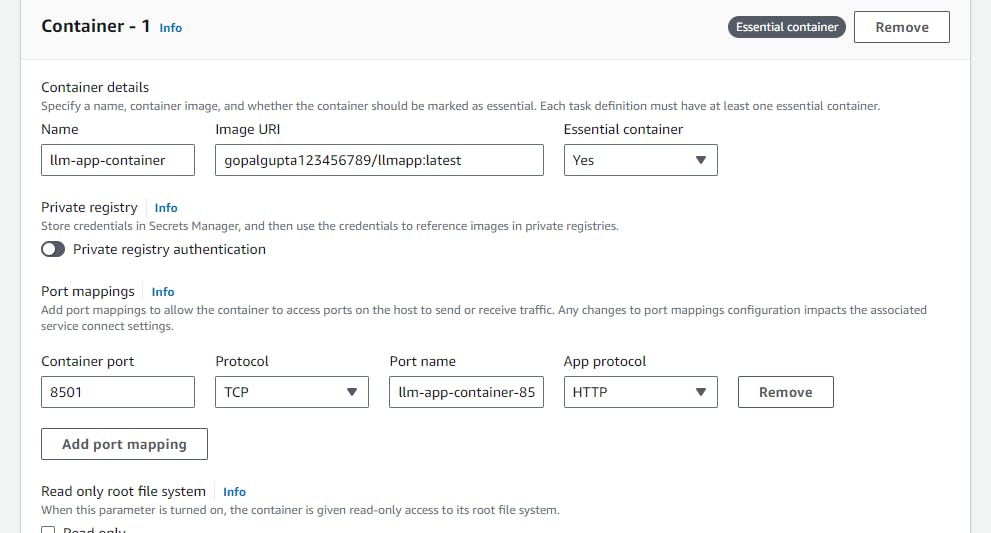

Enter your container name, set the image URI to your DockerHub repository, and set the container port to the port your container exposes (8501), as shown below.

In the Environment variables section, add OPENAI_API_KEY with your key as the value, as shown below. Keep everything else as default and click Create.

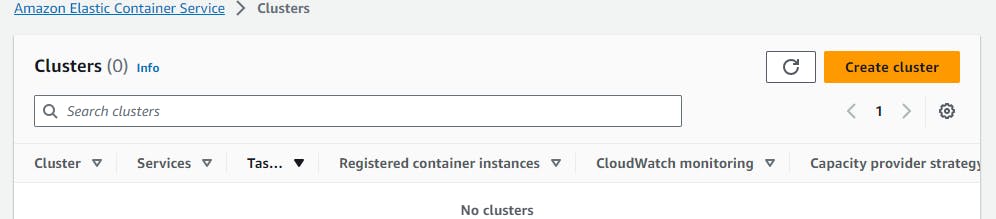

ECS Cluster:

After we create our task definition, we can deploy it as either a service or a task on our cluster. A cluster is a logical grouping of tasks or services that run on the capacity infrastructure registered to that cluster.

A task is the instantiation of a task definition within a cluster. We can run a standalone task, or we can run a task as part of a service. An Amazon ECS service runs and maintains our desired number of tasks simultaneously in a cluster: if any task fails or stops for any reason, the service scheduler launches another instance from our task definition to replace it and keep the desired number of tasks running.
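The "maintain the desired number of tasks" behaviour can be pictured with a toy model. This is only an illustration of the idea, not how the ECS scheduler is implemented; the function and task names are made up.

```python
import itertools


def reconcile(running_tasks, desired_count, launch):
    """Return a task list topped up to desired_count.

    running_tasks: ids of the tasks that are still healthy
    launch: callable that starts a new task and returns its id
    """
    tasks = list(running_tasks)
    while len(tasks) < desired_count:
        tasks.append(launch())
    return tasks


if __name__ == "__main__":
    counter = itertools.count(1)

    def launch():
        return f"task-{next(counter)}"

    tasks = reconcile([], 2, launch)     # initial deployment: task-1, task-2
    tasks.remove("task-1")               # simulate a task failure
    tasks = reconcile(tasks, 2, launch)  # the scheduler starts a replacement
    print(tasks)                         # ['task-2', 'task-3']
```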

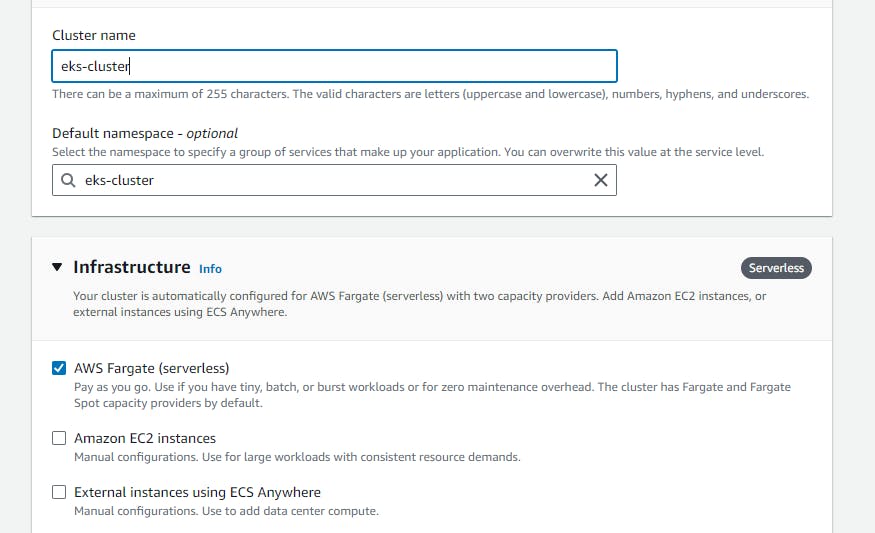

Let's create an ECS cluster first.

Click on Create cluster.

Choose AWS Fargate as the infrastructure, keep everything else as default, and click Create.

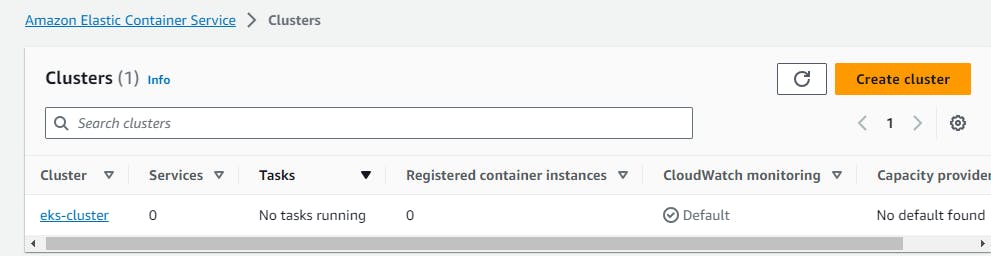

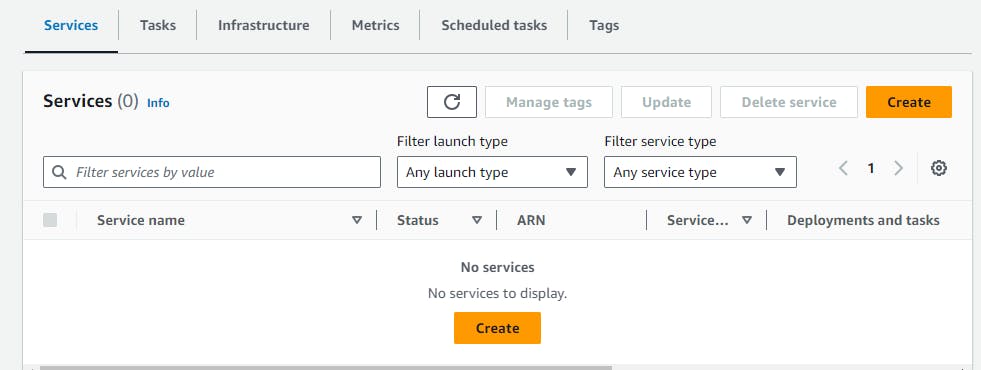

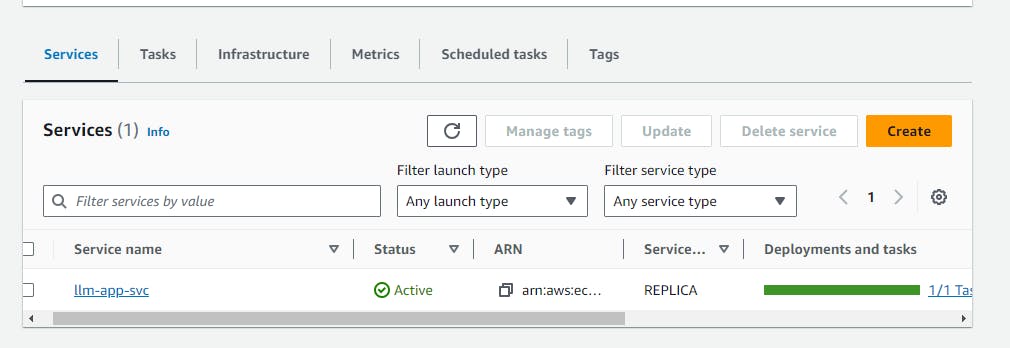

Once your cluster is ready, we can create either a task or a service. Here we will go with a service.

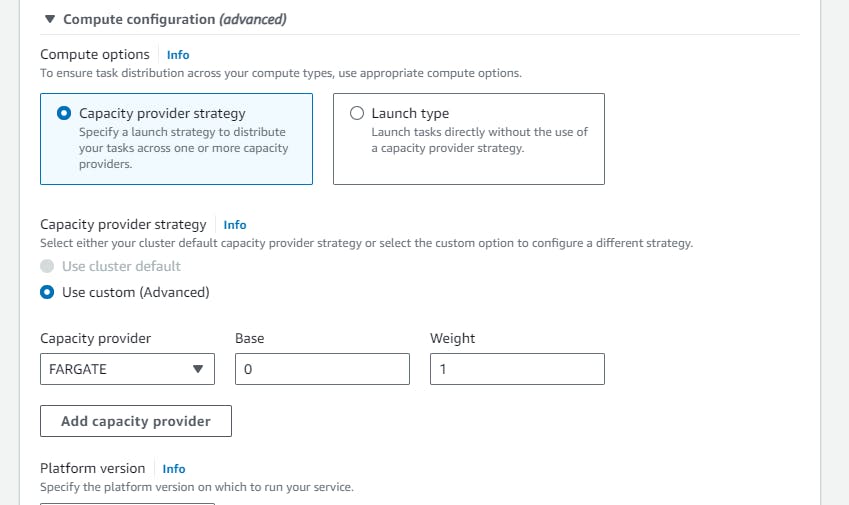

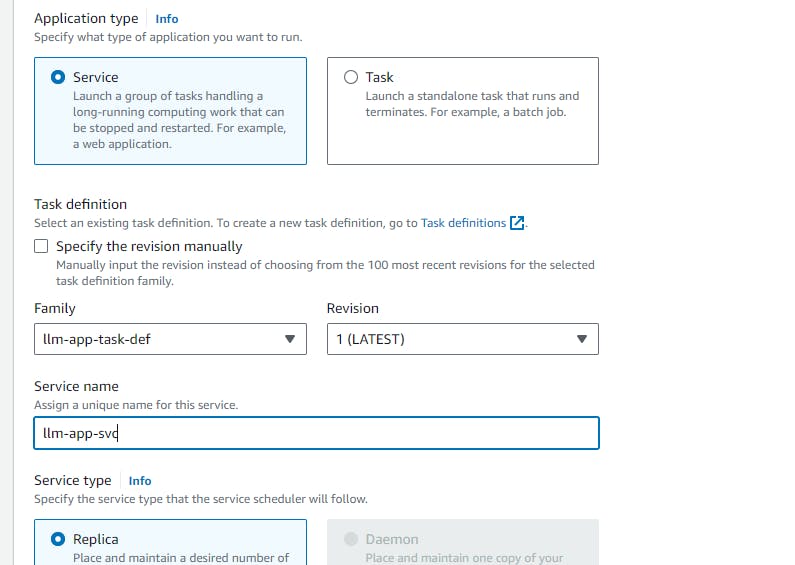

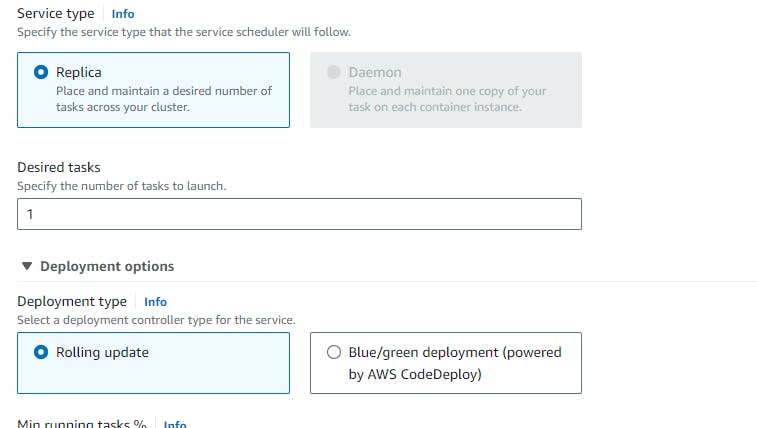

Go to your cluster --> Services --> Create --> in the Deployment configuration, select your task definition --> keep the desired tasks at 1 for now. You can set the number of desired tasks to whatever your requirements call for.

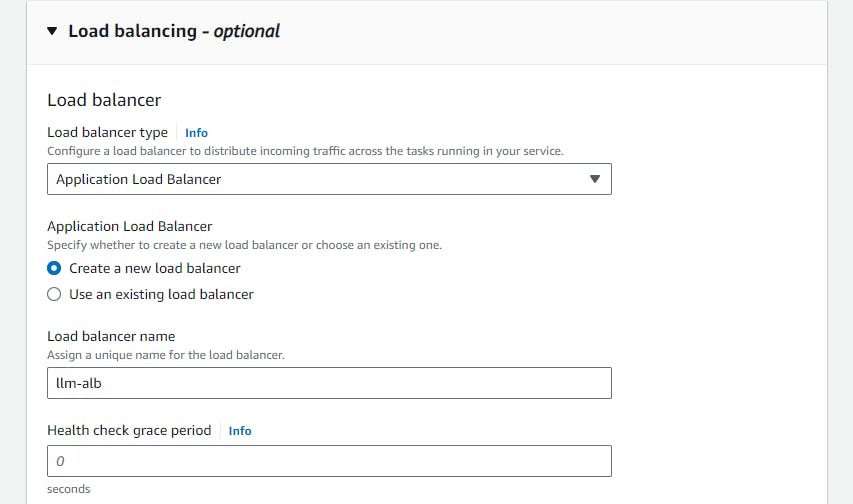

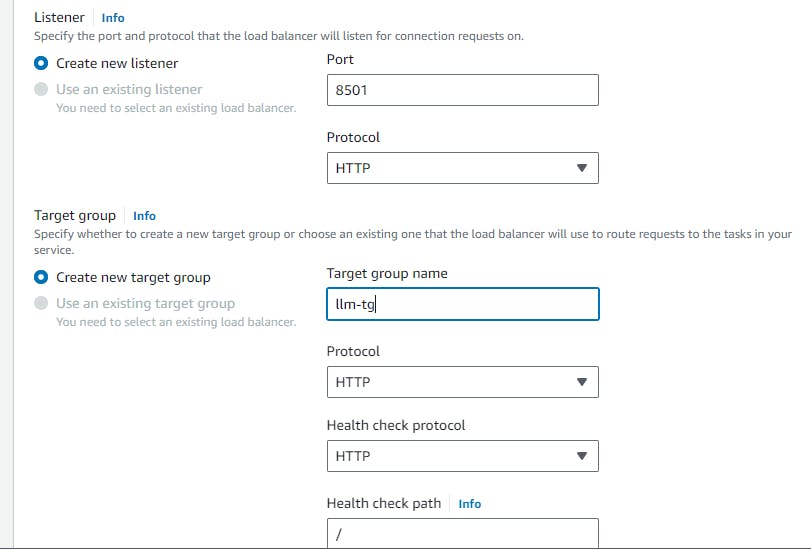

In the load balancer section --> choose Application Load Balancer --> create a new load balancer --> create a new listener on port 8501, and fill in the rest as shown below.

Keep everything else as default and click Create.
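The console choices above map onto a service request roughly like the one sketched here. The parameter names follow boto3's `create_service` call, but the service name, container name, subnet, security group, and target group values are all placeholders. Note one difference: the console creates the load balancer, listener, and target group for you, while the API expects an existing target group.

```python
def build_service_request(cluster, task_definition, subnets, security_groups,
                          target_group_arn, container_name="pdf-assistant",
                          desired_count=1):
    """Build keyword arguments for an ECS service running on Fargate."""
    return {
        "cluster": cluster,
        "serviceName": "pdf-assistant-service",   # hypothetical name
        "taskDefinition": task_definition,
        "desiredCount": desired_count,
        "launchType": "FARGATE",
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": list(subnets),
                "securityGroups": list(security_groups),
                # a public IP lets a task in a public subnet pull from DockerHub
                "assignPublicIp": "ENABLED",
            }
        },
        "loadBalancers": [
            {
                "targetGroupArn": target_group_arn,
                # must match the container name in the task definition
                "containerName": container_name,
                "containerPort": 8501,
            }
        ],
    }
```

With boto3 installed and credentials configured, the dictionary could be passed as `boto3.client("ecs").create_service(**request)`. That call is left out here so the sketch stays runnable without an AWS account.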

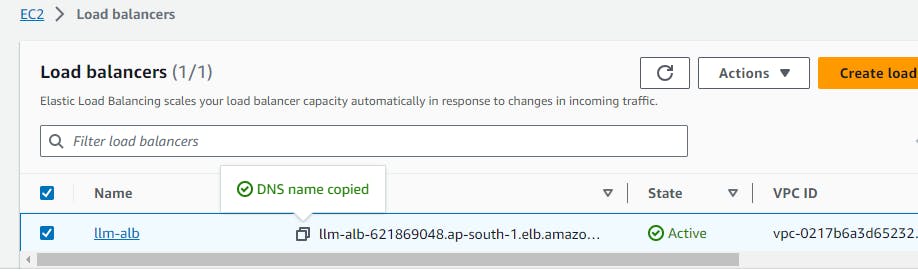

Once the application is ready ---> go to the load balancer and copy the ALB DNS name.

Open the ALB URL with port 8501 in your browser to access the application. Upload any PDF and ask questions about it.
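If you prefer to check the endpoint from a script before opening the browser, a small helper like this one can build the URL and test whether it responds. It is a sketch only; the DNS name in the example is a made-up placeholder, and the security group must allow inbound traffic on port 8501 for the check to succeed.

```python
from urllib.request import urlopen


def app_url(alb_dns_name, port=8501):
    """Build the application URL from the ALB DNS name and listener port."""
    return f"http://{alb_dns_name}:{port}"


def is_reachable(url, timeout=5):
    """Return True if the URL answers with HTTP 200, False on any network error."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError and HTTPError are subclasses of OSError
        return False


if __name__ == "__main__":
    # placeholder DNS name; use the one shown for your load balancer
    url = app_url("my-alb-1234567890.us-east-1.elb.amazonaws.com")
    print(url, "reachable:", is_reachable(url))
```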

----------- --- TADAAAA!!!!!!!!-----------

Thank You for going through my blog... Happy Learning!!!!!!!!

HUGE SHOUTOUT TO THE PROMPT ENGINEERING YOUTUBE CHANNEL FOR THE SOURCE CODE.